Nassim N. Taleb dropped some truth in his recent piece for Wired, “Beware the Big Errors of Big Data”, letting us silly scientists (of data and social flavours) and statisticians in on a startling observation: with more data comes more noise, and more cherry-picking, and more false results.

Except he hasn’t, and it doesn’t, and it won’t.

Let me offer a more nuanced rebuttal, with the caveat that I’m not a statistician, so I’m only scratching the surface on that count. What’s his argument, and why do I disagree with it? I’d characterise it like this: big data is full of “noise”, which is being interpreted as meaningful patterns and sold on as such to unsuspecting people by unscrupulous hucksters.

My problem with it is that it obscures a lot of subtlety to make obvious points. Point 1: Big Data allows “researchers” to “cherry-pick”. OK, but researchers can act in bad faith outside of big data too. Our baseline assumption should be that researchers act in good faith, or at least that we have checks and balances to catch them when they don’t. So what’s special about big data? Well, it’s so big and data-y that researchers might be cherry-picking without knowing it, or we might have no way of catching them out because methods to test for this don’t exist.

Oh wait, they do. It’s called “statistics”.

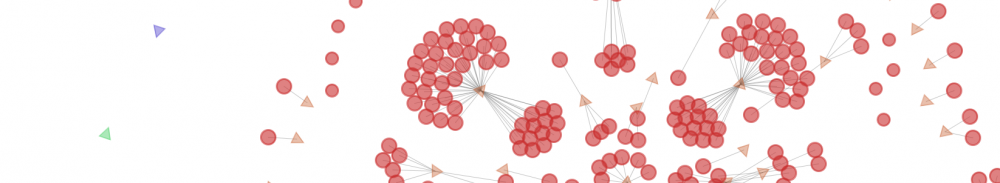

One of the elisions Taleb has made is between the quantity of data and its dimension. A high-dimensional dataset might be someone’s Nectar card, say, where for each individual we have thousands of pieces of information. I, or some hypothetical researcher, might look at their own Nectar card purchases and their spouse’s, and conclude that people who buy New Girl DVDs are more likely to drink cheap lager. With thousands of products and only two shoppers, some pair of purchases is bound to line up by chance, so this would be a ridiculous extrapolation from a tiny group. It’s related to the phenomenon of overfitting: mistaking the quirks of your sample for patterns in your population.

One very simple way to deal with this is to collect more data. Our hypothetical Nectar card researcher might look at his children’s spending and find no correlation between their consumption of cheap lager and their New Girl DVD habit: they might drink loads of cheap lager and never once see a Zooey Deschanel DVD. So the silly hypothesis is nipped in the bud.
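A toy simulation makes the link between dimension, sample size and spurious patterns concrete. It is a sketch under loud assumptions: every column is pure random noise (so any correlation it finds is spurious by construction), and the function name and the “lager” column are hypothetical stand-ins for Nectar-style spending, not real data. With a handful of shoppers and hundreds of products, the best-looking product tends to correlate almost perfectly with lager spending; with hundreds of shoppers, the best it can find is weak.

```python
import random


def pearson(xs, ys):
    """Pearson correlation coefficient of two equal-length sequences."""
    n = len(xs)
    mx = sum(xs) / n
    my = sum(ys) / n
    sxy = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    sxx = sum((x - mx) ** 2 for x in xs)
    syy = sum((y - my) ** 2 for y in ys)
    return sxy / (sxx * syy) ** 0.5


def strongest_spurious_correlation(n_people, n_features, seed=0):
    """Largest |r| between a pure-noise 'lager spend' column and
    n_features pure-noise 'purchase' columns, over n_people shoppers.
    (Hypothetical data: nothing here is related to anything else.)"""
    rng = random.Random(seed)
    lager = [rng.gauss(0, 1) for _ in range(n_people)]
    best = 0.0
    for _ in range(n_features):
        # Each feature is fresh noise, so any correlation is pure chance.
        feature = [rng.gauss(0, 1) for _ in range(n_people)]
        best = max(best, abs(pearson(feature, lager)))
    return best


if __name__ == "__main__":
    # Same number of 'products', increasingly many shoppers.
    for n in (5, 50, 500):
        print(n, round(strongest_spurious_correlation(n, 500), 2))
```

Searching many columns is what manufactures the “finding”; adding more people is what makes it evaporate.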

If you don’t want to go out and collect new data, you can split your existing dataset, using one portion to form hypotheses and another to test them. There are lots of ways to do this because, guess what, statisticians have been thinking about this problem for upwards of a hundred years, and the concept of overfitting wasn’t invented during a TED talk.
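Here is a minimal sketch of the split-sample idea, again on pure noise and with hypothetical names (`holdout_check` is made up for illustration). It cherry-picks the feature most correlated with the target on one half of the rows, then re-measures that same feature on the untouched other half. This is the simplest hold-out scheme; cross-validation and its relatives are more careful refinements of the same principle.

```python
import random


def pearson(xs, ys):
    """Pearson correlation coefficient of two equal-length sequences."""
    n = len(xs)
    mx = sum(xs) / n
    my = sum(ys) / n
    sxy = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    sxx = sum((x - mx) ** 2 for x in xs)
    syy = sum((y - my) ** 2 for y in ys)
    return sxy / (sxx * syy) ** 0.5


def holdout_check(n_people=40, n_features=1000, seed=0):
    """Pick the best-looking feature on an exploration half, then
    re-measure it on a confirmation half. All data is random noise."""
    rng = random.Random(seed)
    lager = [rng.gauss(0, 1) for _ in range(n_people)]
    features = [[rng.gauss(0, 1) for _ in range(n_people)]
                for _ in range(n_features)]

    # Shuffle row indices, then split into two disjoint halves.
    idx = list(range(n_people))
    rng.shuffle(idx)
    half = n_people // 2
    explore, confirm = idx[:half], idx[half:]

    def r_on(rows, feature):
        return pearson([feature[i] for i in rows], [lager[i] for i in rows])

    # Form the hypothesis: cherry-pick the strongest correlation.
    best = max(features, key=lambda f: abs(r_on(explore, f)))
    # Test it on rows that played no part in choosing it.
    return r_on(explore, best), r_on(confirm, best)


if __name__ == "__main__":
    r_explore, r_confirm = holdout_check()
    print(f"exploration r = {r_explore:.2f}, confirmation r = {r_confirm:.2f}")
```

Because the data is noise, the “discovery” on the exploration half tends to look strong while the confirmation-half correlation tends to sit near zero, which is exactly the check that exposes the cherry-pick.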

But I digress. Splitting the data is just one way I know of to deal with “cherry-picking”; statisticians use many more. (@vonaurum has been particularly helpful in conversation on Twitter: he’s made various comments on using boosting methods to reduce overfitting, but I’m not very knowledgeable on that subject, so I’ll leave it to him to elucidate.)

The second point is that not all data is high-dimensional like Nectar data. “Big” can simply mean a large number of participants or timepoints, which doesn’t necessarily imply high dimensionality. (And where data is high-dimensional, statisticians also have techniques, such as principal component analysis, to reduce the number of dimensions and simplify the information.)
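Principal component analysis (PCA) is one standard way to reduce dimensions: it finds the directions along which the data varies most. The sketch below uses only the standard library, building the covariance matrix and finding its leading eigenvector by power iteration; in practice you’d reach for NumPy or scikit-learn instead. The three made-up “spending” columns, two of which nearly duplicate each other, are an assumption for illustration, as are all the function names.

```python
import random


def covariance_matrix(rows):
    """Sample covariance matrix; rows is a list of people, each a list of features."""
    n, d = len(rows), len(rows[0])
    means = [sum(r[j] for r in rows) / n for j in range(d)]
    centred = [[r[j] - means[j] for j in range(d)] for r in rows]
    return [[sum(c[i] * c[j] for c in centred) / (n - 1) for j in range(d)]
            for i in range(d)]


def first_principal_component(cov, iterations=200):
    """Leading eigenvector and eigenvalue of a covariance matrix by power iteration."""
    d = len(cov)
    v = [1.0] * d
    for _ in range(iterations):
        w = [sum(cov[i][j] * v[j] for j in range(d)) for i in range(d)]
        norm = sum(x * x for x in w) ** 0.5
        v = [x / norm for x in w]
    # Rayleigh quotient v^T C v: the variance along the direction v.
    eigenvalue = sum(v[i] * sum(cov[i][j] * v[j] for j in range(d))
                     for i in range(d))
    return v, eigenvalue


def explained_variance_ratio(rows):
    """Share of total variance captured by the first principal component."""
    cov = covariance_matrix(rows)
    _, top = first_principal_component(cov)
    total = sum(cov[i][i] for i in range(len(cov)))  # trace = total variance
    return top / total


if __name__ == "__main__":
    rng = random.Random(0)
    rows = []
    for _ in range(200):
        base = rng.gauss(0, 1)
        # Hypothetical columns: two near-duplicate products plus a small noisy one.
        rows.append([base, base + rng.gauss(0, 0.1), rng.gauss(0, 0.1)])
    print(round(explained_variance_ratio(rows), 3))  # a value close to 1
```

Here one component summarises almost all of the variation in three columns, so the dataset is effectively lower-dimensional than it looks.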

On one level, Taleb is correct: very few people really understand stats (myself included!), so we should be very cautious about the analyses we use. There are real issues about the quality of published data, and he links to studies on the subject. On the other hand, there’s a serious body of work behind getting this right, and painting data scientists as deluded scoundrels does no one any favours. We shouldn’t say “oh noes” and give up; we should try harder to engage with the serious and significant scholarship that’s been done, and incorporate it into mainstream practice.

[EDIT: The original piece contained a couple of sentences that were rather dismissive of Taleb, which led to him having a massive go at me on Twitter and calling me an imbecile. I thought that was excessive, but each to their own. I’ve let the original article stand for eight months so you can see what I wrote, but that bit doesn’t add much to a piece which is fairly simple and one-sided anyway, so I’ve taken it out. I still think the Wired piece this responds to was simplistic too, and I object more to that than to his general body of publications, which I don’t know, so I’ve removed (some of) the snark.]

All of which suggests that this isn’t a problem fundamental to big data. For any data, garbage in means garbage out, however big the dataset, and that’s what we want to avoid.